timelapse 3

- was going through lilian weng's policy gradient blog

- covered everything up to a3c, went through the pg theorem proof & found another great blog

- next up are dpg, ddpg & d4pg

- haven't implemented them yet, only the theory as of now, but will do it ofc

- & this timelapse looks good, niceee :) https://t.co/hyt6yB4mQi
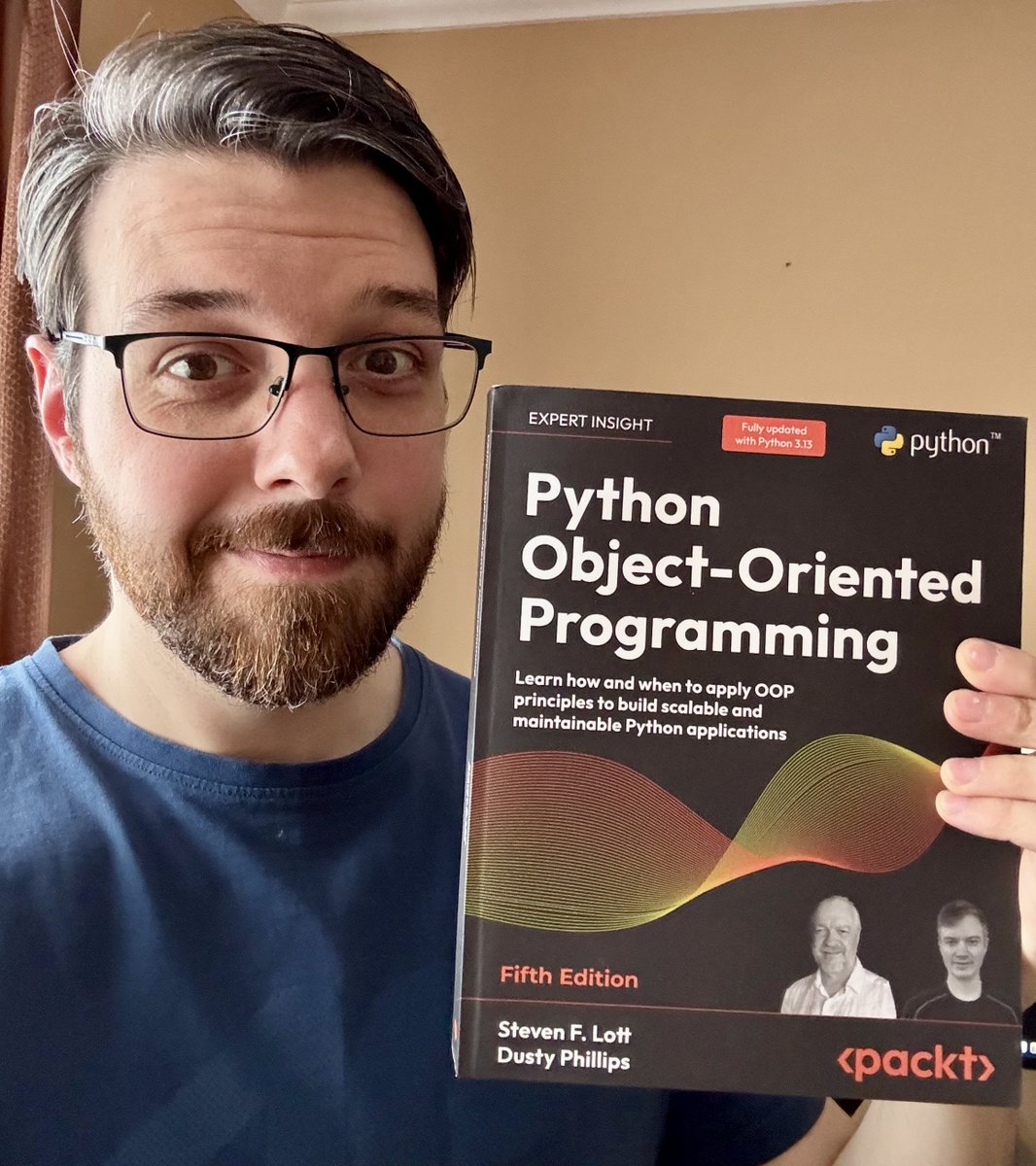

AI Engineers: stop shipping notebook spaghetti into production!

Python Object-Oriented Programming (5th Edition) is a practical guide that teaches you how (and when) to apply OOP to build scalable, maintainable Python applications.

I reviewed this book because I keep reminding https://t.co/95SSTUs8QM
- Total Members: 64.3K
- 24h Growth: +12
- 7d Growth: +144

| Date | Members | Change |
|---|---|---|
| Feb 10, 2026 | 64.3K | +12 |
| Feb 9, 2026 | 64.3K | +38 |
| Feb 8, 2026 | 64.3K | +14 |
| Feb 7, 2026 | 64.2K | +20 |
| Feb 6, 2026 | 64.2K | +33 |
| Feb 5, 2026 | 64.2K | +27 |
| Feb 4, 2026 | 64.2K | +16 |
| Feb 3, 2026 | 64.1K | +17 |
| Feb 2, 2026 | 64.1K | +25 |
| Feb 1, 2026 | 64.1K | +9 |
| Jan 31, 2026 | 64.1K | +13 |
| Jan 30, 2026 | 64.1K | +18 |
| Jan 29, 2026 | 64.1K | +21 |
| Jan 28, 2026 | 64K | n/a |
Community Rules

Be kind and respectful.

Keep Tweets on topic.

Explore and share.