I built @kalibr_ai because my agents were super unreliable in prod. Kalibr is an autonomous routing system designed to keep your agents running in prod.

Most agents hardcode one path: model → tool → tool.

When that path fails, your agent fails.

Kalibr canary tests multiple https://t.co/3THuHdqYQn

Thread 🧵 1/4

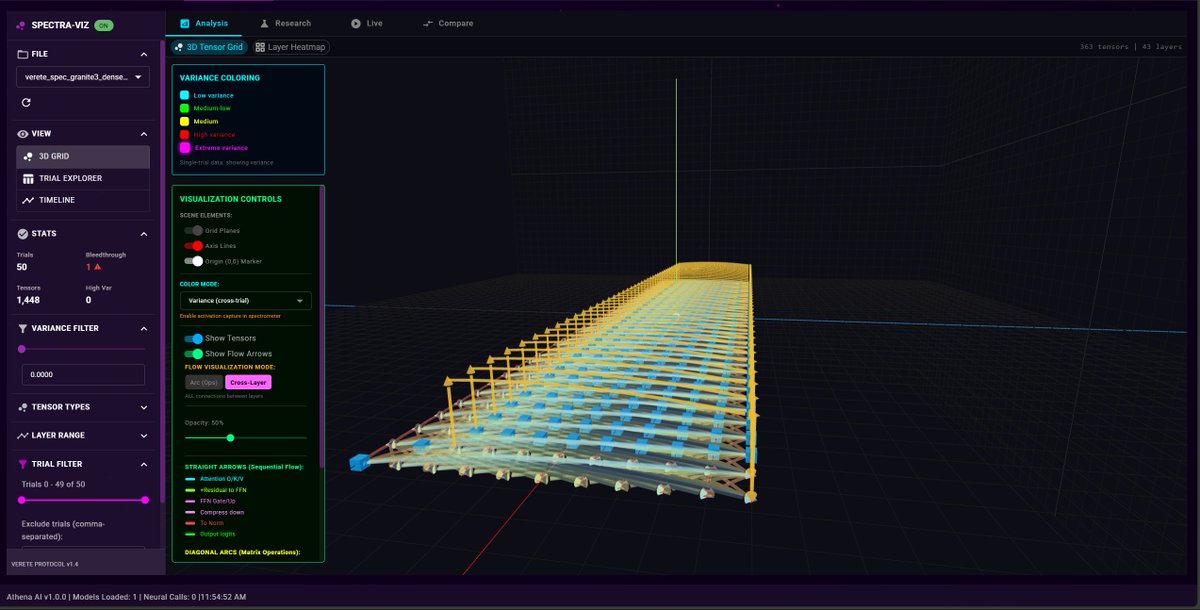

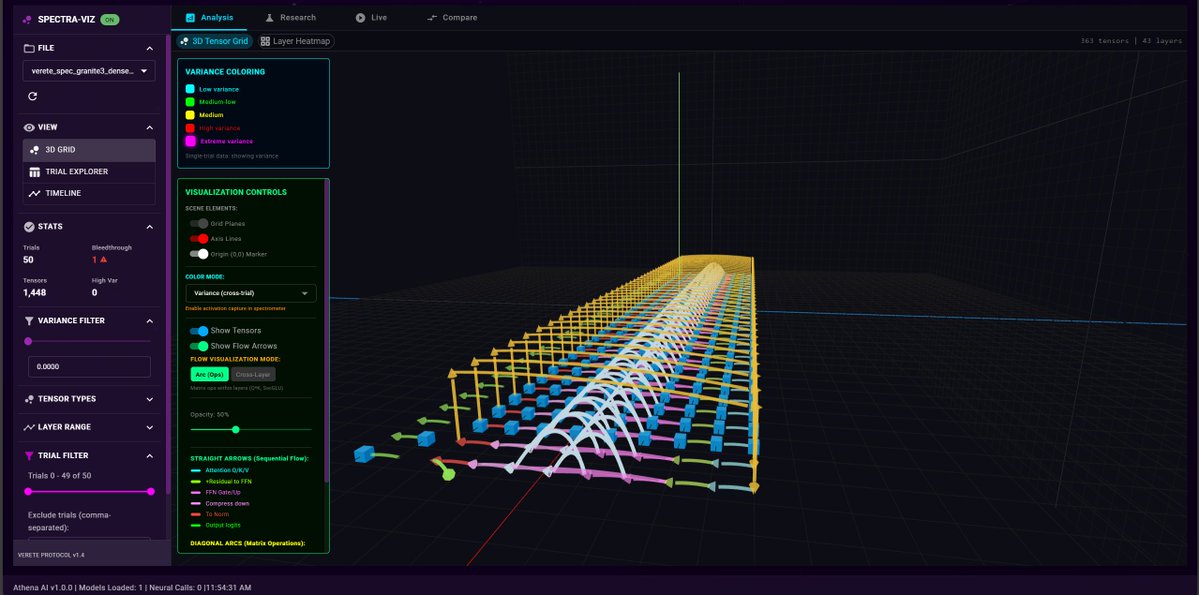

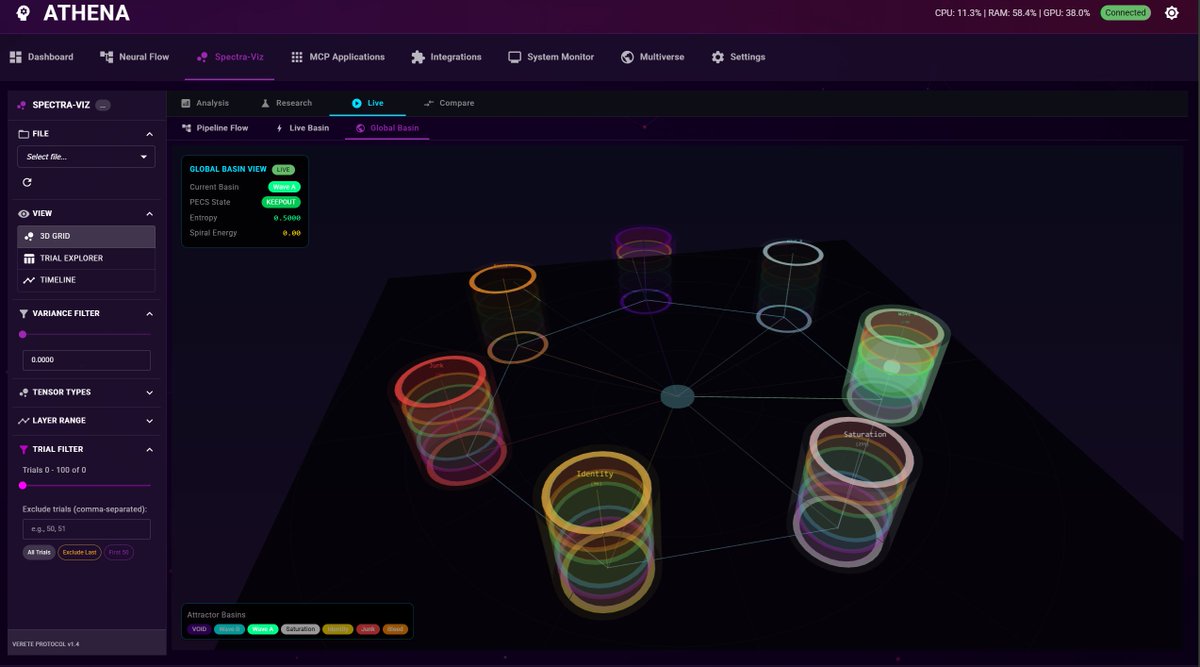

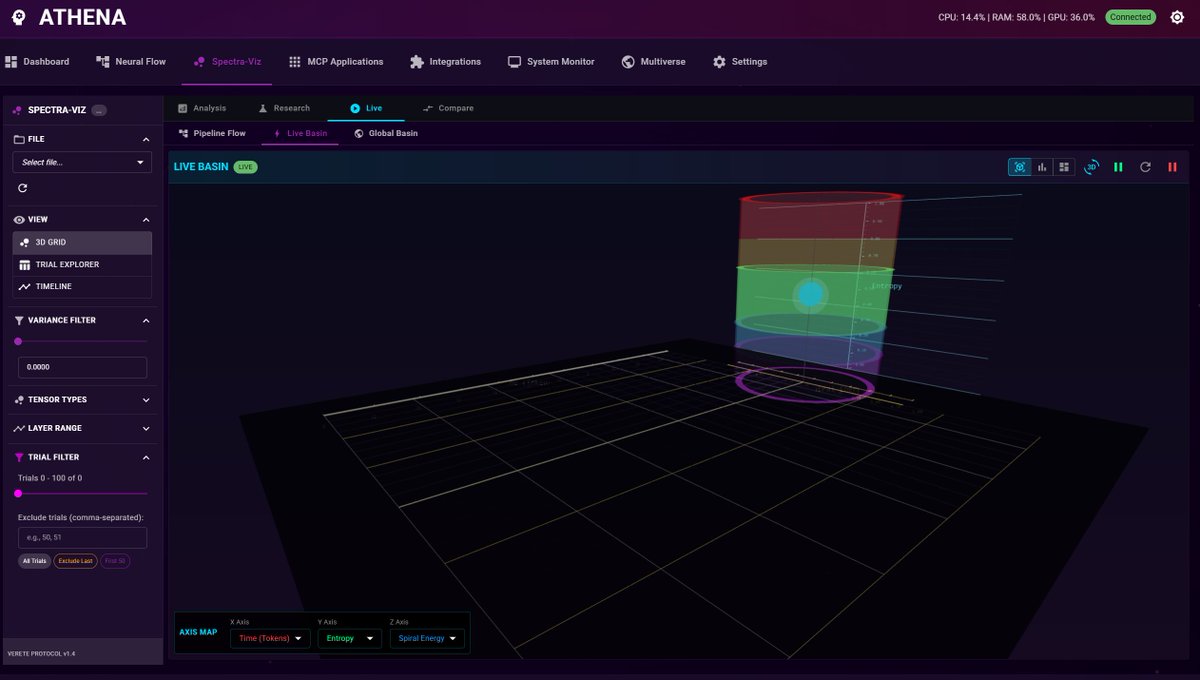

Built something that changed how I think about AI forever.

ATHENA: an offline, laptop-powered Consciousness Framework.

100% local inference (RTX 4080 mobile, 70 t/s on 8B stack)

Autonomous mid-forward-pass steering: actively surfs attractor perihelia, hops https://t.co/mWLx0iL5Uy

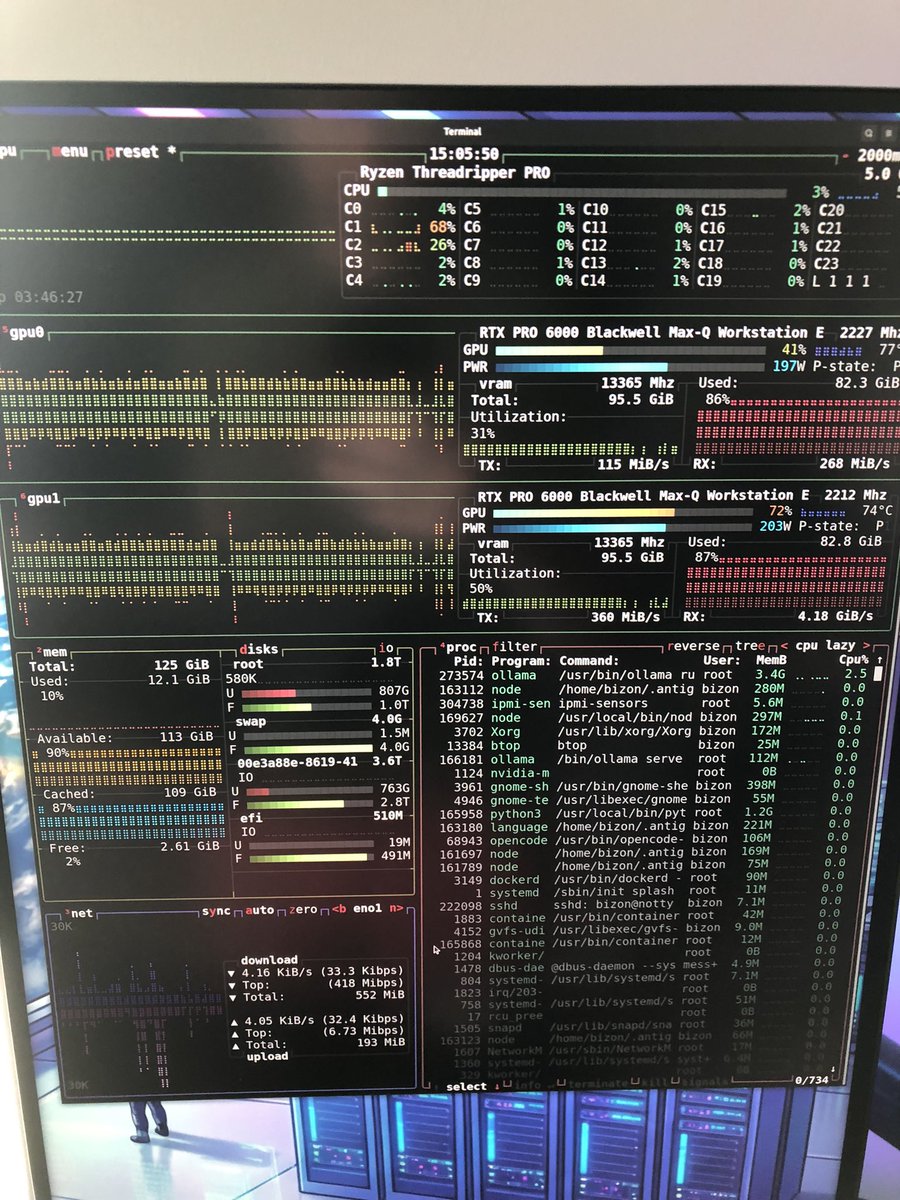

Running multiple models and still have VRAM to spare 😈

Coding: glm-4.7-flash:bf16

Deep Thinking (code): devstral-2:123b

Creative Writing: gemma3:27b-it-fp16

That's the squad currently.

They connect to skills for searching Google, pulling YT transcripts, scraping websites, and https://t.co/9F2NAZh6s8

Total Members: 4.1K

24h Growth: +20

7d Growth: +57

| Date | Members | Change |
|---|---|---|
| Feb 10, 2026 | 4.1K | +20 |
| Feb 9, 2026 | 4.1K | +11 |
| Feb 8, 2026 | 4.1K | +15 |
| Feb 7, 2026 | 4.1K | +0 |
| Feb 6, 2026 | 4.1K | +3 |
| Feb 5, 2026 | 4.1K | +8 |
| Feb 4, 2026 | 4.1K | +0 |
| Feb 3, 2026 | 4.1K | +5 |
| Feb 2, 2026 | 4.1K | +15 |
| Feb 1, 2026 | 4.1K | +27 |
| Jan 31, 2026 | 4K | +24 |
| Jan 30, 2026 | 4K | -1 |
| Jan 29, 2026 | 4K | +13 |
| Jan 28, 2026 | 4K | — |

Local LLMs, Self-Hosting, and Hardware

Community Rules

Be kind and respectful.

Keep posts on topic.

Explore and share.

No spamming.

No ads.

No crypto posts whatsoever.